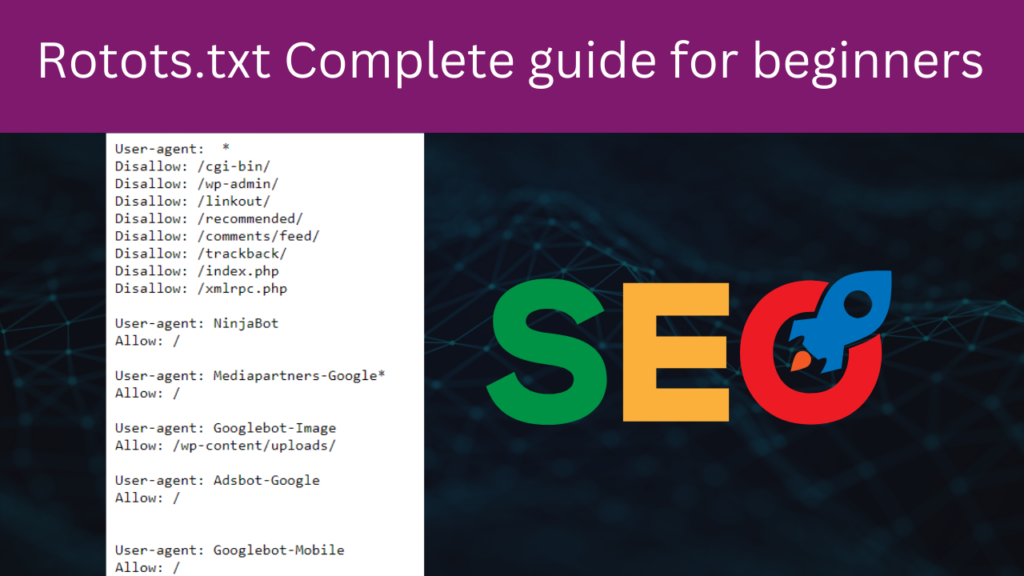

What is a Robots.txt File, and How to Add It to Your Blog (SEO, Generator, Checker, Blogger, WordPress, Use)

Is your blog post not being crawled and indexed as you would like? Do you know what a Robots.txt file is? What are the benefits of a Robots.txt file? How do you add a Robots.txt file to Blogger and WordPress? New bloggers often run into problems because they do not properly understand the Robots.txt file.

According to Neil Patel, the co-founder of NP Digital, a Robots.txt file is a small text file that is part of every website on the internet. The Robots.txt file is created specifically for search engine crawlers and plays a role in SEO. It does not raise your rankings by itself, but it helps search engines spend their crawling on the pages you want found. However, many bloggers do not understand its value. Are you one of them? If so, your website may be missing out.

If you want your website to rank as well in search engines as other bloggers’ sites, this article covers what you need to know about the Robots.txt file: what it is, why it is important for SEO, and how to add it to your blog.

This article is going to be very useful for you, so don’t miss any points. Let’s start with what a Robots.txt file is (Robots.txt File Meaning).

What is a Robots.txt File?

The Robots.txt File is how a website implements the Robots Exclusion Protocol. It is a plain text file placed in the root of the site (for example, `https://example.com/robots.txt`). The Robots.txt File tells web robots, such as Google’s crawler, which parts of your site they may crawl and which they should skip. In short, it passes your crawling instructions to the search engine.

Many bloggers still do not properly understand what a Robots.txt File is. As the `.txt` extension in the name suggests, it is an ordinary text file that contains nothing but text.

In other words, if you want search engines to crawl or skip a particular page of your blog, you can specify this in the Robots.txt File. Keep in mind that it controls crawling: a blocked page can still show up in results if other sites link to it.

Later, when a search engine like Google, Bing, or Yahoo comes to crawl your blog, its crawler first reads the Robots.txt File. The directives in the file tell it which parts of the blog it may crawl and which it should not.

The biggest advantage of this is that your blog becomes more SEO-friendly, because the search engine can easily tell which parts to crawl and which to leave alone.
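To make this concrete, here is a minimal sketch of how a crawler might consult the file before fetching a page. It uses Python’s standard `urllib.robotparser` module. The rules, the `example.com` URLs, and the helper name `is_allowed` are assumptions made for illustration, and real search engines use their own parsers, which can differ in details.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for an example blog; the paths are illustrative only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given crawler may fetch the URL under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in ("https://example.com/my-first-post",
                "https://example.com/private/drafts"):
        print(url, "->", is_allowed(ROBOTS_TXT, "Googlebot", url))
```

With these sample rules, the first URL is reported as allowed and the second as blocked, because only paths starting with `/private/` are disallowed.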

Why is the Robots.txt file important for SEO?

The Robots.txt file is crucial for SEO for several reasons:

- Directs Search Engine Bots: It tells search engine bots which pages or sections of the site should not be crawled. This keeps crawlers away from parts of the site that are not meant for search results or are not important for SEO.

- Limits Crawling of Duplicate Content: By disallowing certain URLs, you can keep search engines from crawling duplicate content or pages that could dilute your SEO efforts.

- Efficient Use of Crawl Budget: Search engines allocate a crawl budget for each website, which is the number of pages a search engine bot will crawl on your site within a certain timeframe. By preventing unnecessary pages from being crawled, you ensure that important content is crawled and indexed more efficiently.

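- Keeps Crawlers Out of Admin Areas: Robots.txt can ask search engines not to crawl pages such as admin pages. It is not a security feature, though: the file is publicly readable and badly behaved bots can ignore it, so truly sensitive pages and user data need real protection such as a login.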

- Improves Site Structure Visibility: By guiding bots to crawl and index the important parts of your site, you help improve the overall visibility of your site’s structure in search engine results, potentially leading to better SEO outcomes.

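However, it’s important to use the Robots.txt file carefully. Incorrect use can accidentally block important pages from being crawled, negatively impacting your SEO efforts. For example, a single `Disallow: /` line under `User-agent: *` tells every crawler to stay away from the entire site.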

What are the benefits of the Robots.txt File?

Now that you have understood what the Robots.txt File is and why it is important for SEO, let’s learn about the benefits of the Robots.txt File.

- The Robots.txt File can keep search engine crawlers out of any section of a website (it does not make that section private to human visitors).

- Through the Robots.txt File, low-quality pages of a website can be blocked from crawling.

- The Robots.txt File helps websites with poor SEO by steering crawlers away from unimportant pages and toward the content that matters.

- The Robots.txt File gives instructions to search engine bots on how to properly crawl your content.

- The Robots.txt File tells search engine bots which parts of the content to crawl and which parts not to.

Important Things Related to the Robots.txt File

Now, let’s look at the main directives used in the Robots.txt File.

| Directive | Description | Additional Information |
|---|---|---|
| Allow | Specifies which files or directories a web crawler is allowed to access. | Used to allow access to specific content. |
| Disallow | Instructs web crawlers not to access specific files or directories. | Used to block access to specific content. |
| User-agent | Identifies the specific web crawler to which the rule applies. | Used to target rules for specific crawlers; `*` means all crawlers. |
| User-agent: Mediapartners-Google | Targets the crawler that Google AdSense uses to read your pages for ad matching. | Use carefully, especially with AdSense. |
| Sitemap | Specifies the location of the sitemap. This can help search engines to discover your content faster. | Not a rule but helpful for search engines. |
| Crawl-delay | Sets a delay between the crawl requests to the server to prevent server overload. | Not officially supported by all search engines; Google ignores it. |
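As a rough illustration of how these directives fit together, the sketch below puts them in one sample file and reads them back with Python’s standard `urllib.robotparser` (the `site_maps()` call needs Python 3.8 or later). The domain, paths, and delay value are made up for the example. Note one assumption about ordering: Python’s parser applies the first matching rule, so the `Allow` line is placed before the broader `Disallow` line; search engines such as Google pick the most specific rule instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules; example.com and every path here are placeholders.
ROBOTS_TXT = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Allow: /private/public-page.html
Disallow: /private/
Crawl-delay: 10

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Allow / Disallow under "User-agent: *" apply to general crawlers.
general_ok = parser.can_fetch(
    "Googlebot", "https://example.com/private/public-page.html")
general_blocked = not parser.can_fetch(
    "Googlebot", "https://example.com/private/notes.html")

# The empty "Disallow:" lets the AdSense crawler fetch everything.
ads_ok = parser.can_fetch(
    "Mediapartners-Google", "https://example.com/private/notes.html")

# Crawl-delay and Sitemap are exposed through dedicated accessors.
delay = parser.crawl_delay("Googlebot")
sitemaps = parser.site_maps()

print(general_ok, general_blocked, ads_ok, delay, sitemaps)
```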

How to Add Robots.txt File in Blogger?

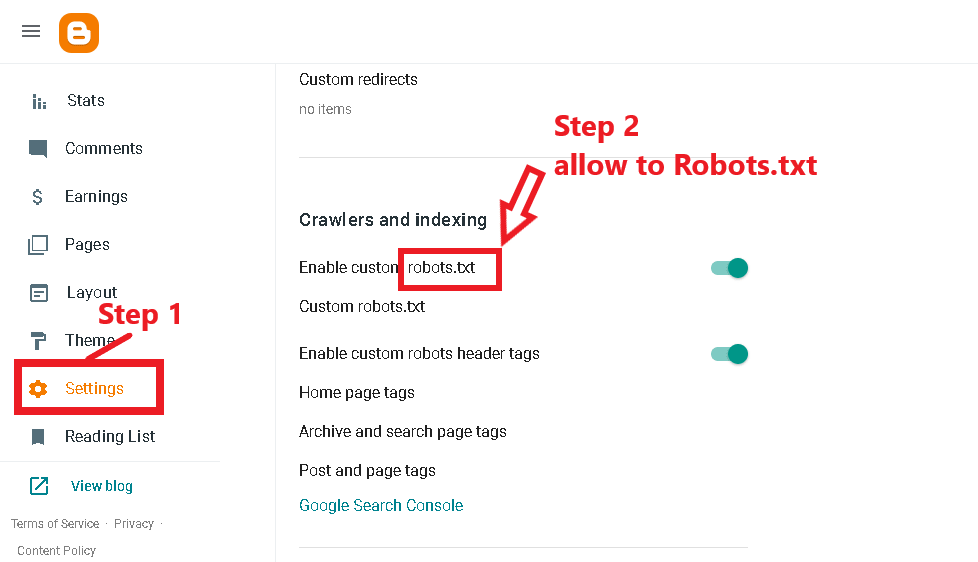

Due to the recent update in Blogger, the method of adding the Robots.txt File has changed. Now, you need to go to Settings, scroll to the “Crawlers and indexing” section, turn on “Enable custom robots.txt”, and then click “Custom robots.txt” to enter your rules.

A Robots.txt File is one of the tools for making your blog SEO-friendly, yet most people tend to ignore it. Blogger already generates a sensible default Robots.txt File for your blog, so a custom file is only needed when you want different rules.

In this way, you can easily use a Robots.txt File on Blogger. Note that this setting applies only to blogs hosted on Blogger. If you have migrated your blog from Blogspot to WordPress, or your website already runs on WordPress, use the WordPress method described in the next section instead.

Use our free robots.txt file generator tool to create the file for Blogger.
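If you are curious what such a generator does, here is a minimal sketch. The function name `build_blogger_robots` is hypothetical, and the rules follow a layout commonly seen on Blogger blogs (blocking `/search` listing pages and pointing to `/sitemap.xml`); treat both the layout and the sitemap path as assumptions to adapt, not as Blogger’s official output.

```python
from urllib.robotparser import RobotFileParser


def build_blogger_robots(blog_url: str) -> str:
    """Build robots.txt text for a Blogger blog (hypothetical helper)."""
    base = blog_url.rstrip("/")
    lines = [
        "User-agent: Mediapartners-Google",
        "Disallow:",
        "",
        "User-agent: *",
        "Disallow: /search",   # search and label listing pages
        "Allow: /",
        "",
        f"Sitemap: {base}/sitemap.xml",  # assumed sitemap location
    ]
    return "\n".join(lines) + "\n"


robots_txt = build_blogger_robots("https://example.blogspot.com/")
print(robots_txt)

# Sanity check the generated text with the standard-library parser.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
post_allowed = parser.can_fetch(
    "Googlebot", "https://example.blogspot.com/2024/05/sample-post.html")
search_allowed = parser.can_fetch(
    "Googlebot", "https://example.blogspot.com/search/label/SEO")
```

The printed text is what you would paste into the “Custom robots.txt” box after replacing the example address with your own blog URL.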

How to Add Robots.txt File in WordPress?

Adding a Robots.txt File in WordPress is slightly different from Blogger. You typically need to use a plugin or manually create and upload the file to the root directory of your WordPress installation. Here are the general steps:

- Using a Plugin: You can install an SEO plugin such as Yoast SEO, Rank Math, or All in One SEO, each of which includes a feature to edit your Robots.txt File. After installing the plugin, navigate to its settings and look for the option to edit Robots.txt. From there, you can customize the file as needed.

- Manual Creation and Upload: If you prefer not to use a plugin, you can manually create a Robots.txt File using a text editor like Notepad. Include the directives you want, such as specifying which parts of your site to allow or disallow for search engine crawling. Once you’ve created the file, save it as “robots.txt” and upload it to the root directory of your WordPress installation using an FTP client or your web hosting control panel.
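The manual route can also be scripted. The sketch below writes a starter file with Python instead of Notepad. The rules shown are a hypothetical starting point for a WordPress site, the helper name `write_robots_txt` is made up, and a temporary directory stands in for your real WordPress root folder; you would still upload the resulting file via FTP or your hosting control panel.

```python
import tempfile
from pathlib import Path

# Hypothetical starting rules for a WordPress site; adjust before real use.
WORDPRESS_RULES = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
"""


def write_robots_txt(site_root: Path, rules: str) -> Path:
    """Save the rules as robots.txt (exact lowercase name) in site_root."""
    target = site_root / "robots.txt"
    target.write_text(rules, encoding="utf-8")
    return target


# Demo: a temporary directory stands in for the WordPress root folder.
with tempfile.TemporaryDirectory() as tmp:
    saved = write_robots_txt(Path(tmp), WORDPRESS_RULES)
    saved_name = saved.name
    saved_text = saved.read_text(encoding="utf-8")
    print(f"Wrote {saved_name}:")
    print(saved_text)
```

The file name must be exactly `robots.txt` and the file must sit in the site root, because crawlers only look for it at that one address.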

After adding or modifying the Robots.txt File, it’s a good practice to test it, for example with the robots.txt report in Google Search Console (which replaced the older Robots.txt Tester tool), to ensure it’s set up correctly and is functioning as expected.
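You can also run a quick local check before uploading. The sketch below is one possible approach, not a replacement for Google’s own tools: it compares what a set of rules actually allows against what you expect. The helper `find_mismatches`, the URLs, and the expectations are all hypothetical, and Python’s `urllib.robotparser` is simpler than Google’s parser (for instance, it does not handle `*` wildcards inside paths), so treat the result as a first sanity check.

```python
from urllib.robotparser import RobotFileParser


def find_mismatches(robots_txt, expectations, user_agent="Googlebot"):
    """Return (url, expected, actual) tuples where the rules surprise us."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    mismatches = []
    for url, expected in expectations:
        actual = parser.can_fetch(user_agent, url)
        if actual != expected:
            mismatches.append((url, expected, actual))
    return mismatches


# Hypothetical expectations: posts should be crawlable, admin pages not.
EXPECTATIONS = [
    ("https://example.com/my-first-post/", True),
    ("https://example.com/wp-admin/options.php", False),
]

GOOD_RULES = "User-agent: *\nDisallow: /wp-admin/\n"
BAD_RULES = "User-agent: *\nDisallow: /\n"  # blocks the whole site by mistake

good_report = find_mismatches(GOOD_RULES, EXPECTATIONS)
bad_report = find_mismatches(BAD_RULES, EXPECTATIONS)
print("good rules:", good_report)
print("bad rules:", bad_report)
```

An empty list means the rules behave as expected; any entry in the list points to a URL that is blocked or allowed contrary to your intent.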

Other Information About the Robots.txt File

| Field Name | Description |
|---|---|
| File Name | robots.txt |
| Where Used | In the root directory of the website |
| Purpose | To give crawling instructions to search engines |
| How to Add | Through an SEO plugin such as Yoast SEO in WordPress, or generated automatically by Blogger (Blogspot), where it can be customized in Settings |
